Moving web servers with Docker containers, theory and practice

Anyone who rents a virtual server or cloud server has to replace it from time to time to get a new operating system. At the latest when updates are no longer provided for the operating system, a server change is inevitable. Some time ago, I switched all my websites to Docker containers, which should speed up such a move. First, some theory on my thinking, then how my last server move went. I also created a video about my last server move, see YouTube video. If you want to skip the theory and my thoughts, you can go straight to the practice of my last server move.

Why Docker?

Originally, a new server also brought with it a new PHP and MySQL version, and it wasn't uncommon for me to have to tweak quite a few things on the websites and server settings during a server move to get them working properly again. With Docker, there are no such surprises because the containers behave identically on all hosts. The containers can be patched independently of the host operating system. In addition, Docker makes it very easy to use a reverse proxy for adding HTTPS certificates, see: secure https connection: Traefik Reverse Proxy + Let's Encrypt.

For server switching, two challenges arise, even with Docker:

- transferring data from one server to another

- changing access to the new server

Transferring Docker images or the containers themselves: not necessary in my case.

I created a docker-compose.yml file for each site. The files contain all the information for downloading the necessary Docker images and creating the containers. So for moving the web services, I don't need to transfer the images or containers, as they can be recreated using the docker-compose.yml files. It's different with the container folders or volumes: these contain all the persistent data of my containers and reflect their current data state.

rsync and bind mounts

Those who use bind mounts instead of volumes can store the folders on the host file system and view and manage them with the host's own tools. On Linux-based operating systems, these folders can be transferred to another host very easily and quickly with rsync. With the right parameters, rsync transfers all data on the first call and only the changed data on subsequent calls, which reduces the transfer time considerably. On my last server, I was able to synchronize about 20 GB of data in less than a minute.

Here is an example to copy folders using rsync:

sudo rsync -rltD --delete -e ssh root@IPOLDServer:/var/mydockerfolders/ /var/mydockerfolders

Assuming the appropriate permissions, volumes from the "/var/lib/docker" folder can probably also be transferred with rsync without any problems. I must admit, however, that I have not tested this yet. Since /var/lib/docker is managed by Docker, accessing it directly is probably not the correct way.
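Because --delete removes files on the target that no longer exist on the source, it can be worth previewing a transfer before running it. The following is only a minimal sketch under assumptions: the function name sync_docker_folders and the host name OLD_SERVER are placeholders for illustration. It uses the same rsync options as the example above and adds rsync's --dry-run and --itemize-changes options in preview mode, so nothing is changed and the pending changes are listed.

```shell
#!/bin/bash
# Sketch: preview an rsync transfer before running it for real.
# sync_docker_folders and OLD_SERVER are hypothetical names for illustration.

sync_docker_folders() {
    local source="$1"            # e.g. root@OLD_SERVER:/var/mydockerfolders/
    local target="$2"            # e.g. /var/mydockerfolders
    local mode="${3:-preview}"   # "preview" (default) or "run"

    if [ "$mode" = "preview" ]; then
        # --dry-run changes nothing, --itemize-changes lists what would change.
        rsync -rltD --delete -e ssh --dry-run --itemize-changes "$source" "$target"
    else
        # -r recursive, -l keep symlinks, -t keep modification times,
        # -D keep device and special files, --delete remove extra files on the target.
        rsync -rltD --delete -e ssh "$source" "$target"
    fi
}

# Usage (placeholders):
#   sync_docker_folders root@OLD_SERVER:/var/mydockerfolders/ /var/mydockerfolders preview
#   sync_docker_folders root@OLD_SERVER:/var/mydockerfolders/ /var/mydockerfolders run
```

Calling the function with "preview" first and "run" afterwards keeps the options identical between both calls, so the preview shows what the real run would do.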

Alternative: Shared Storage

An alternative to transferring the data would be to use central storage or a NAS. For example, if the container data is stored on central network storage such as NFS, the new server only needs access to that file system. The containers could then be stopped on the old server and simply created and started on the new server.

GlusterFS

While searching for an alternative to shared storage, I also briefly looked at GlusterFS. GlusterFS can replicate selected folders permanently and synchronously between hosts over the network and thus forms something like distributed shared storage.

Caution with databases: do not copy during operation

mysqldump

Database files should not simply be copied on the fly, as the copy may not be consistent, see: Docker Backup
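A logical dump with mysqldump is one way to get a consistent copy while the database keeps running. The following is a minimal sketch under assumptions: it assumes the database runs in a container based on the official mysql image with the MYSQL_ROOT_PASSWORD environment variable set, and the function name dump_all_databases as well as the container name in the usage line are hypothetical. Other images, such as newer MariaDB images, may use different tool and variable names.

```shell
#!/bin/bash
# Sketch: dump all databases from a running MySQL container into a file on the host.
# Assumes the official mysql image with MYSQL_ROOT_PASSWORD set inside the container.

dump_all_databases() {
    local container="$1"   # name of the database container
    local outfile="$2"     # target file on the host

    # The single quotes keep $MYSQL_ROOT_PASSWORD from being expanded on the host,
    # so the value defined inside the container is used.
    # --single-transaction gives a consistent snapshot for InnoDB tables.
    docker exec "$container" sh -c \
        'exec mysqldump --all-databases --single-transaction -uroot -p"$MYSQL_ROOT_PASSWORD"' \
        > "$outfile"
}

# Usage (hypothetical container name):
#   dump_all_databases libe_db /var/web/libe.net/dump.sql
```

The resulting SQL file can be transferred with rsync like any other file and imported on the new server.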

Copy stopped Docker container

A very universal variant to transfer all data consistently is to stop the containers at the source server and copy them while they are off. Of course, there is a short downtime with this variant. For moving websites, this downtime can usually be accepted, since the services on the old server may have to be stopped briefly anyway for the switch to the new server. For a backup, this variant is less suitable, since backups should run regularly and not every visitor needs to notice that the website is being backed up.

Changing the access to the server

There are several ways to change the access from the old to the new server:

DNS change

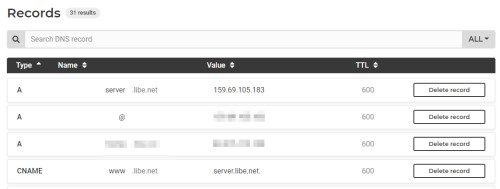

Probably the simplest and oldest variant is to change the DNS entries to the IP address of the new server.

See also: Forward traffic to another server. I do not use the next two variants myself, but they are included for the sake of completeness:

Apply IP address to the new server

Certain providers offer a way to move an IP address from one server to another: Floating IP.

Whether and how an IP address can be transferred to a new server depends on the provider.

Use a load balancer

If a load balancer of a provider is used, it distributes the accesses to different servers. Since the access is to the load balancer, its IP address remains when the servers are exchanged.

Docker Swarm

Before moving my web server this time, I tested Docker Swarm. With Docker Swarm, containers are distributed across different hosts that are connected over a shared network. For example, a web container could run on one host and its database container on another. Docker Swarm solves the access problem, as all containers are reachable through all hosts in a shared swarm. However, Docker Swarm only takes care of the network, not the volumes being used. For this reason, Swarm is suitable for containers that either do not need to store persistent data or are combined with central network storage: shared storage, a NAS, or distributed storage such as GlusterFS.

Since I've been getting by with one virtual server so far, Docker Swarm would produce more overhead than benefit in my case, although the shared network could certainly help when moving the server. Looking for an alternative way to route requests from one server to another, I found Traefik, see: Traefik: Forwarding traffic to another server. For transferring the data, I simply used the rsync command.

See also, Docker Compose vs. Docker Swarm: using and understanding it

Forwarding traffic

Another way to change the access to another server is to forward all requests to the new server, see: Traefik: Forwarding traffic to another server. To minimize the downtime of the server change, I forwarded the traffic during my last server move until the DNS change was known everywhere.
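Whether a DNS change has already spread can be spot-checked by asking a few public resolvers. The following is a minimal sketch under assumptions: the function names dns_points_to and check_resolvers are hypothetical, the hostname and IP address in the usage line are placeholders, and the three resolvers are only a sample. Such a check cannot prove that the change is known everywhere.

```shell
#!/bin/bash
# Sketch: check whether public DNS resolvers already return the new server's IP.
# Function names, hostname and IP address are placeholders for illustration.

dns_points_to() {
    local host="$1"          # hostname to look up
    local expected_ip="$2"   # IP address of the new server
    local resolver="$3"      # DNS resolver to ask, e.g. 1.1.1.1

    # dig +short prints only the answer values, one per line.
    # grep -x -F matches a whole line against the literal IP address.
    dig +short A "$host" "@$resolver" | grep -q -x -F "$expected_ip"
}

check_resolvers() {
    local host="$1"
    local expected_ip="$2"
    local resolver

    for resolver in 1.1.1.1 8.8.8.8 9.9.9.9; do
        if dns_points_to "$host" "$expected_ip" "$resolver"; then
            echo "$resolver: new server"
        else
            echo "$resolver: not yet"
        fi
    done
}

# Usage (placeholders):
#   check_resolvers www.example.com 203.0.113.10
```

As long as some resolvers still answer with the old address, the forwarding on the old server has to stay active.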

In practice: my last server move

My simple setup: Folders (bind mounts) and no volumes.

In my setup, I created the /var/web folder on the server, and below that, I created the individual web services in their own subfolders.

As an example:

- /var/web/libe.net and

- /var/web/script-example.com

The root folder of the web services contains the respective docker-compose file of each site and below that the folders with the persistent data:

- /var/web/libe.net/docker-compose.yml

- /var/web/libe.net/www ... Folder with the site data

- /var/web/libe.net/db ... Folder with the database files

With this folder structure all relevant data is centrally located below the /var/web folder. Since /var/web is the only folder on the server that requires protection, it is sufficient to back it up. When moving the server, it is also sufficient to stop all containers and transfer only the /var/web folder to the new server and restart all services there with docker-compose. Access from the Internet to the individual websites is controlled by the Traefik Reverse Proxy.

Keep the downtime as low as possible

My original idea for the data transfer was to simply copy the contents of all container folders from the old server to the new one using the rsync command and then start the containers on the new server. With this variant, I could start all websites on the new server in parallel without downtime and then change the DNS to the new server. However, this approach has disadvantages: First, simply copying databases while they are running is somewhat problematic, see: mysql. Second, as long as the DNS change is not active everywhere, there is a transition period in which requests would end up on both servers.

At the expense of a short downtime, this time I stopped all containers, then transferred the services, and subsequently forwarded all requests from the old web server to the new one. I came up with the following procedure for the move:

| Existing server | New server |
|---|---|
| 1) stop all Docker containers | 3) stop all containers |
| 2) start TCP redirection | 4) copy all changed data |
| | 5) start all containers |

To stop all containers at the source server, the following command can be used:

docker kill $(docker ps -q)

Forwarding all requests can be done with the following setup: Traefik: Forward traffic to another server.
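A note on stopping the containers: docker kill sends SIGKILL by default, so the processes get no chance to shut down cleanly. docker stop first sends a stop signal and only kills the container after a grace period, which can be the gentler choice for database containers. The following is a minimal sketch of that alternative, not the command used in this move. The function name stop_all_containers and the 30 second grace period are assumptions.

```shell
#!/bin/bash
# Sketch: stop all running containers gracefully instead of killing them.
# stop_all_containers and the 30 second timeout are assumptions for illustration.

stop_all_containers() {
    local running
    running="$(docker ps -q)"

    if [ -z "$running" ]; then
        echo "no running containers"
        return 0
    fi

    # docker stop sends the stop signal first and SIGKILL only after the
    # timeout (in seconds) has passed. $running is left unquoted on purpose
    # so that each container ID becomes its own argument.
    docker stop -t 30 $running
}

# Usage:
#   stop_all_containers
```

The check for an empty list avoids calling docker stop without arguments when nothing is running.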

Finally, I stopped all containers at the target server for safety reasons, transferred the last changes of the folders with rsync, and then started all containers. To keep the downtime as low as possible, I wrote the commands in a bash file:

File: target-actions.sh

#!/bin/bash

# Stop any containers already running on the target server
docker kill $(docker ps -q)

# Fetch the latest changes from the old server
rsync -rltD --delete -e ssh root@IPoldServer:/var/web/ /var/web

# Start the reverse proxy first, then the websites
cd /var/web/traefik

docker compose up -d

cd /var/web/website1

docker-compose up -d

cd /var/web/website2

docker-compose up -d

Start the bash file:

chmod +x target-actions.sh

./target-actions.sh

After running the bash file, the containers run on the new server. To route access directly to the new server, the DNS entry can now be changed to its IP address. Once all containers are running on the new server and no more relevant requests are being forwarded via the old server, the old server can be switched off and deleted.
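With many websites, listing every folder in the script gets tedious. Since each service folder below /var/web contains its own docker-compose.yml, the start step could also be written as a loop. The following is a minimal sketch under assumptions: the function name start_all_services is hypothetical, and it uses the docker compose plugin syntax; with the standalone binary the call would be docker-compose up -d instead. The folders are processed in alphabetical order, so the reverse proxy is not necessarily started first.

```shell
#!/bin/bash
# Sketch: start every service that has a docker-compose.yml below a web root.
# start_all_services is a hypothetical helper, /var/web is the default root.

start_all_services() {
    local web_root="${1:-/var/web}"
    local compose_file service_dir

    for compose_file in "$web_root"/*/docker-compose.yml; do
        # Skip the unexpanded pattern if no folder contains a compose file.
        [ -f "$compose_file" ] || continue

        service_dir="$(dirname "$compose_file")"
        echo "starting $service_dir"

        # Run in a subshell so the working directory of the caller is unchanged.
        (cd "$service_dir" && docker compose up -d)
    done
}

# Usage:
#   start_all_services /var/web
```

Folders without a docker-compose.yml are simply skipped, so additional data folders below the web root do no harm.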


New server: my additional packages

In addition to Docker, I use monitoring with Glances and EARLYOOM on the web server.

Monitoring

Monitoring systems: Monitoring in HomeAssistant with Glances

Using a web server with relatively little memory: RAM

If a Linux server runs out of memory, EARLYOOM may still be able to keep it alive. EARLYOOM is able to stop certain services when the memory runs out, see: When Ubuntu stops responding: Linux Memory Leak.

Conclusion

With multiple servers and a corresponding cluster setup, the containers could certainly be moved even more easily, but this also requires more resources and a more complex and cost-intensive setup. Those who only use one server for their websites can transfer existing Docker containers to another server with a simple rsync command and only have to change the access to it afterwards. Rsync can also be used as a simple backup, see: Docker Backup
